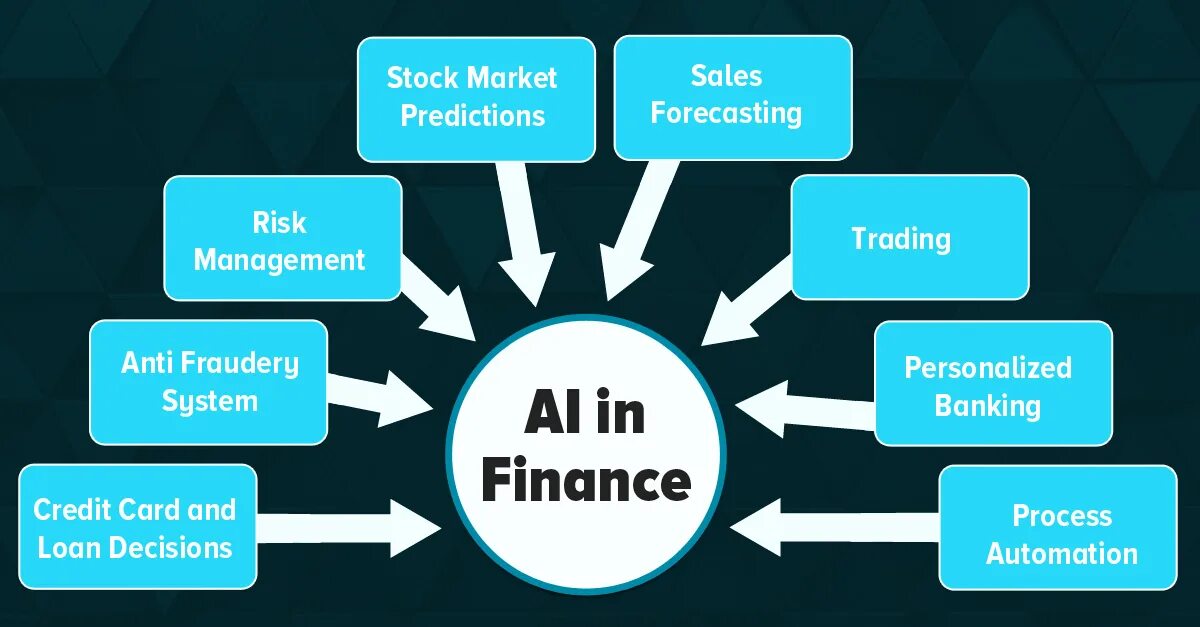

AI has transformed the pace and precision of financial decision-making, automating everything from credit assessments to fraud detection and investment guidance. But as algorithms increasingly shape how financial services operate, the demand for transparency has intensified. In fintech, opacity can erode trust faster than any market disruption.

“Speed without explanation is no longer an advantage,” says Eric Hannelius, CEO of Pepper Pay. “If a customer is denied a loan, they want to know why. If an investor sees a portfolio shift, they want to understand the logic behind it. Black boxes don’t scale trust.”

From Automation to Accountability.

Initially, AI was brought into financial services to optimize speed and consistency. Automated decision systems could process applications in seconds and flag anomalies no human could detect. Yet, as AI’s reach expanded, its complexity often came at the cost of interpretability.

Many models now function beyond the scope of traditional auditing, raising serious concerns about fairness, explainability, and systemic bias.

“We’re in a phase where explainable AI is a market expectation,” Eric Hannelius explains. “You can’t just say ‘the model decided.’ That’s not enough for regulators, and it’s not enough for your customers either.”

This shift is creating new product mandates: transparency must be designed, not tacked on.

Designing for Clarity in Complex Systems.

Explainability is no longer confined to developers or compliance teams. It must reach the end user. Fintech companies are now exploring how to present algorithmic decisions visually, in terms that make sense to non-technical audiences.

One example: real-time credit scoring systems that show which factors impacted a decision, such as income volatility or transaction patterns, without disclosing sensitive data or overwhelming the user with math.

Clarity builds comfort, even when the answer is “no.” And when users understand how the system works, they’re more likely to stay with the platform, even after an unfavorable decision.
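The factor-level explanation described above can be sketched in a few lines of Python. This is a minimal illustration that assumes a simple linear scorecard; the factor names, weights, baseline values, and wording are all hypothetical and not drawn from any real lender or product. It shows one way to surface the factors that hurt a score most, in plain language, without exposing raw numbers or model internals.

```python
# Illustrative linear scorecard. Every weight, baseline, and label below is
# a hypothetical value chosen for this sketch, not data from a real lender.
WEIGHTS = {
    "income_volatility": -40.0,     # more volatile income lowers the score
    "on_time_payment_rate": 60.0,   # paying on time raises the score
    "overdraft_count": -5.0,        # each overdraft lowers the score
}

# Hypothetical reference applicant that each factor is compared against.
BASELINE = {
    "income_volatility": 0.20,
    "on_time_payment_rate": 0.95,
    "overdraft_count": 1.0,
}

# Plain-language text shown to the user instead of raw numbers.
LABELS = {
    "income_volatility": "Income varied more than usual from month to month",
    "on_time_payment_rate": "Some recent payments were late",
    "overdraft_count": "The account was overdrawn several times",
}


def factor_contributions(applicant: dict[str, float]) -> dict[str, float]:
    """Return how many points each factor adds or removes versus the baseline."""
    return {
        name: weight * (applicant[name] - BASELINE[name])
        for name, weight in WEIGHTS.items()
    }


def top_reasons(applicant: dict[str, float], limit: int = 2) -> list[str]:
    """Return labels for the factors that hurt the score most, worst first."""
    contributions = factor_contributions(applicant)
    harmful = [(name, points) for name, points in contributions.items() if points < 0]
    harmful.sort(key=lambda pair: pair[1])  # most negative contribution first
    return [LABELS[name] for name, _ in harmful[:limit]]


# Example applicant (hypothetical values).
applicant = {
    "income_volatility": 0.50,
    "on_time_payment_rate": 0.90,
    "overdraft_count": 4.0,
}
for reason in top_reasons(applicant):
    print(reason)
```

Capping the list at a couple of reasons is a design choice in this sketch: it keeps the explanation short enough to read at a glance. Production models are rarely this simple, and more complex ones would need a dedicated attribution method to produce comparable per-factor contributions.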

Balancing IP with Transparency.

One of the trickier challenges in fintech AI is maintaining transparency without exposing proprietary models. Companies are understandably hesitant to disclose too much about how their decision engines function.

Eric Hannelius offers a pragmatic view: “You don’t have to open your source code to earn trust. But you do need to tell the user what kind of logic is in play. Are you using behavioral data? Do certain spending habits affect outcomes? Be up front, because someone else will be, and customers will migrate to them.” The competitive edge now lies in clarity.

Regulatory Pressure Meets Market Demand.

Global regulators are accelerating efforts to standardize AI governance. The European Union’s AI Act and proposed U.S. legislation such as the Algorithmic Accountability Act are pushing fintech firms to document model behavior, test for bias, and provide accessible explanations.

But the smartest companies aren’t waiting to be told. They’re already integrating fairness audits, bias reduction protocols, and ethical review boards into their development process.

Why? Because the market increasingly rewards responsibility. Investors ask about AI ethics. Customers pay attention to how their data is used. Brand reputation is inseparable from algorithmic integrity.
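A fairness audit of the kind mentioned above can start with something as simple as comparing approval rates across groups. The sketch below is one possible first check, not a complete audit: it computes each group's approval rate relative to the best-served group and flags any group that falls below a threshold. The group names and sample decisions are hypothetical, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance; it is not presented here as a lending standard.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Share of decisions in a group that were approvals."""
    if not decisions:
        raise ValueError("each group needs at least one decision")
    return sum(decisions) / len(decisions)


def adverse_impact_ratios(decisions_by_group: dict[str, list[bool]]) -> dict[str, float]:
    """Return each group's approval rate divided by the highest group's rate."""
    rates = {
        group: approval_rate(decisions)
        for group, decisions in decisions_by_group.items()
    }
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any approvals, so ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def groups_to_review(
    decisions_by_group: dict[str, list[bool]],
    threshold: float = 0.8,  # assumed cutoff for this sketch, see note above
) -> list[str]:
    """Return the groups whose ratio falls below the threshold, sorted by name."""
    ratios = adverse_impact_ratios(decisions_by_group)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit sample: True means the application was approved.
sample = {
    "group_a": [True] * 8 + [False] * 2,  # 80% approved
    "group_b": [True] * 5 + [False] * 5,  # 50% approved
}
print(adverse_impact_ratios(sample))
print(groups_to_review(sample))
```

A flagged group is a prompt for closer review, not proof of bias. Differences in approval rates can have several causes, which is why a check like this works best as one input to a broader audit and review process.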

Teaching Teams to Think Transparently.

Beyond code and compliance, transparency in AI is about culture. It starts with training teams to challenge assumptions embedded in data sets, model choices, and use cases. It continues with recruiting professionals who understand both technology and ethics—and who can ask the right questions when the outputs don’t align with expectations.

“The question isn’t just whether the algorithm works,” Eric Hannelius emphasizes. “It’s whether the outcome makes sense to the people affected by it. If it doesn’t, you’ve built risk into your business whether you see it or not.”

The next generation of fintech innovation won’t be judged solely by its intelligence, but also by its transparency. Whether you’re building robo-advisors, lending algorithms, or fraud detection engines, the challenge is the same: can the people impacted by your decisions understand them?

In the race toward smarter systems, clarity is what will separate leaders from liabilities.